Fulton Undergraduate Research Initiative (FURI)

What is FURI?

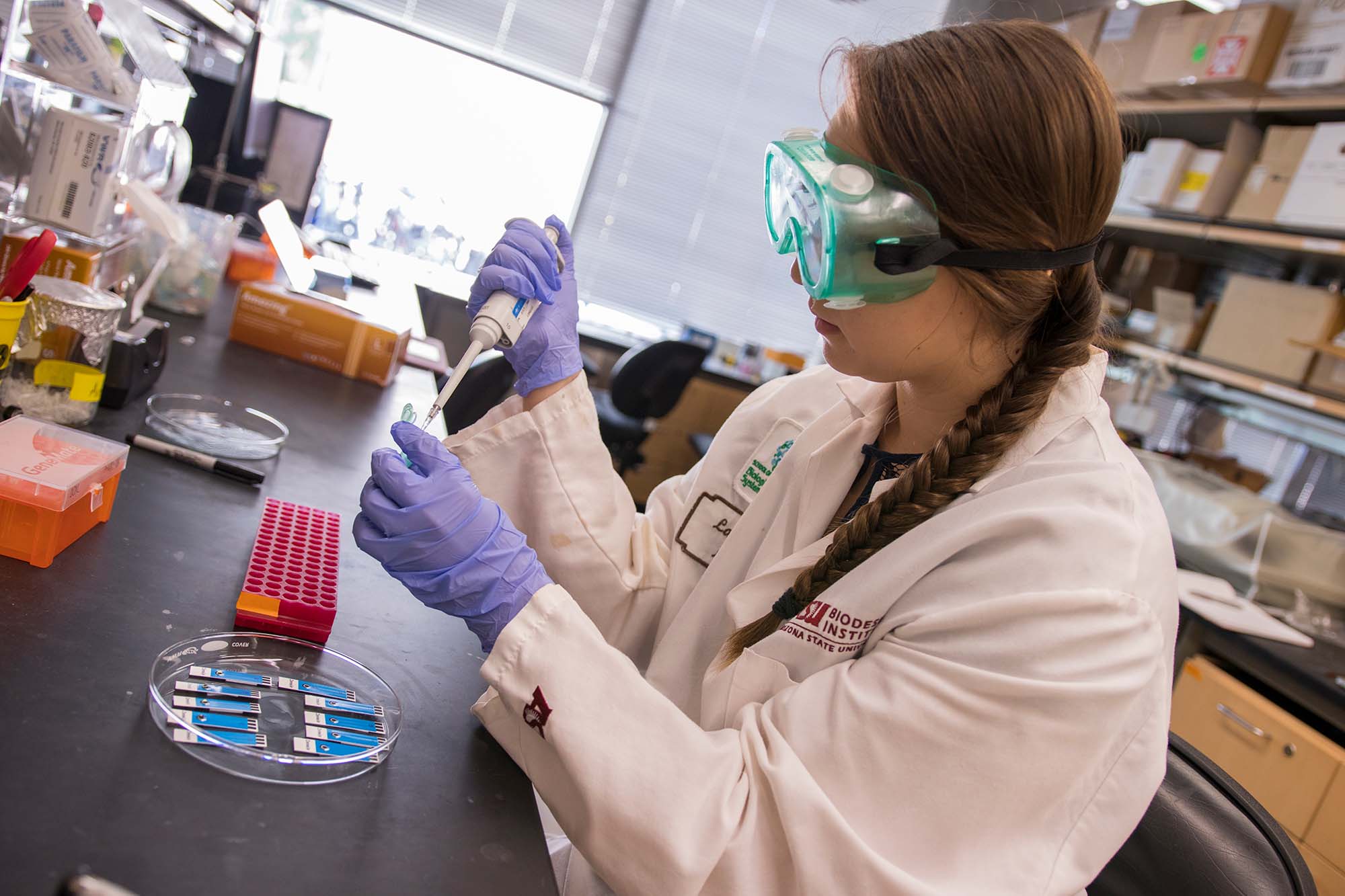

The Fulton Undergraduate Research Initiative (FURI) enhances the student engineering experience and technical education by providing hands-on lab experience, independent and thesis-based research, and travel to national conferences.

Undergraduate students in the Ira A. Fulton Schools of Engineering are highly encouraged to pursue a research project during their studies. You will gain skills and abilities that help you develop your interests and make you more attractive to future employers and graduate programs.

With FURI, you can…

- Contribute to the creation of new knowledge.

- Develop relationships with Fulton faculty members, graduate students, and post-doctoral researchers.

- Gain new knowledge outside the classroom that supports your coursework.

- Improve your oral and written communication skills.

- Improve your critical thinking and analytical skills.

- Clarify your career goals and prepare for the next step after graduation.

Here’s how to get started.

- Explore your research interests and ensure they align with a Fulton Schools student research theme.

- Identify possible research mentors.

Visit your instructors during office hours. Talk about your interest in research and the research areas you are curious about. If they aren’t doing research in that field, they likely know who is. If you need additional assistance, please email [email protected].

- Contact Fulton faculty members and prepare to talk with them.

- Refer to the FURI Application Scoring Rubric to prepare your application and use available resources including:

– The Proposal Template.

– The Fulton Schools Career Center, for help with your resume.

– The ASU Writing Center or ASU Library, for help with your personal statement and research proposal.

- Complete the FURI application.

FURI gave me a glimpse into the work that I do now. My project centered on travel demand forecasting and trip user modification based on the introduction of high-speed rail on the West Coast. While I do not work directly on projects related to high-speed rail design and construction, the methodology, research methods and engineering judgement I developed during the project are very applicable to my daily tasks at work. I am thankful for the opportunity to work on my FURI project, which gave me exposure to research, transferable knowledge for my career and technical engineering skills.

Amanda Minutello, FURI Fall ’19, civil engineering Spring ’20, graduate traffic engineer at AECOM

Eligibility information

Undergraduate students in the Ira A. Fulton Schools of Engineering can receive FURI funding from their second semester of attendance at ASU through their final semester. The FURI program operates during the summer, fall, and spring semesters.

Students are eligible for up to two semesters of funding. All applicants must be undergraduate students in the Ira A. Fulton Schools of Engineering and in good academic standing to be eligible for FURI. Online students are also encouraged to apply. All mentors must be faculty members with an appointment in the Fulton Schools and must not be on sabbatical or extended leave.

Note: As of Summer 2021, we are unable to support projects planned to be conducted remotely from outside the U.S. Funded projects may be carried out remotely, as long as the student researcher is located within the United States.

Questions? Contact Trudi VanderPloeg, FURI Program Administrator, at [email protected].

Funding available

FURI students receive a stipend of $1,500 (less any applicable taxes) for their funded semester(s), which will be disbursed at the end of the semester. Participants are eligible to apply for up to $400 in research supplies per funded semester. You can also explore additional funding from your faculty mentor’s grants or other sources.

Proposal cycles

Proposals are due on the third Wednesday of March and October each year.

Contact us

Reach out at [email protected] for general inquiries. The FURI office is located in ECF 130.